Understanding and Taming Deterministic Model Bit Flip Attacks in Deep Neural Networks

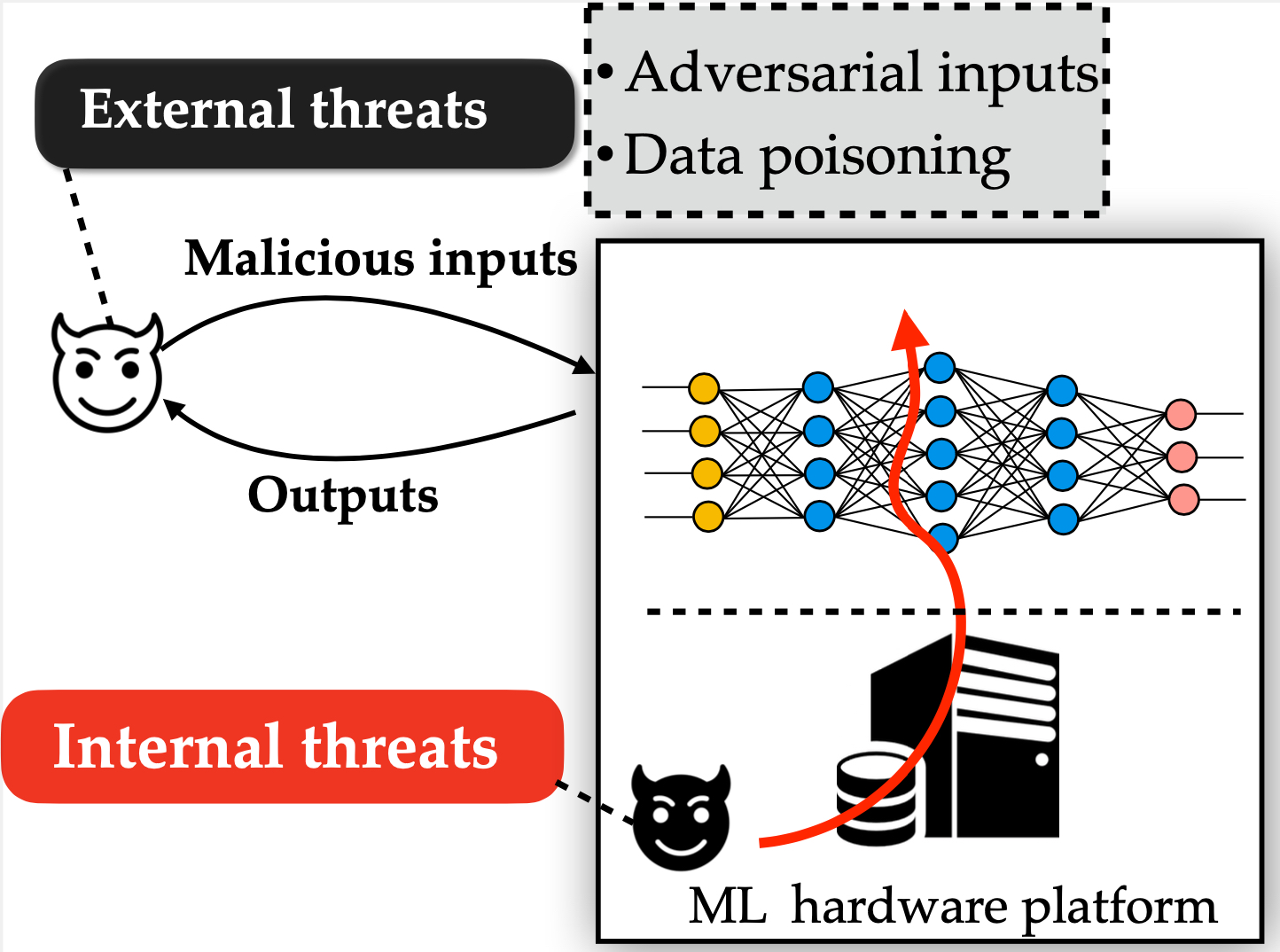

Deep neural networks (DNNs) are widely deployed for a variety of decision-making tasks such as access control, medical diagnostics, and autonomous driving. Compromise of DNN models can severely disrupt inference behavior, leading to catastrophic outcomes for security- and safety-sensitive applications. While tremendous effort has been devoted to securing DNNs against external adversaries (e.g., adversarial examples), internal adversaries that tamper with DNN model integrity by exploiting hardware threats (i.e., fault injection attacks) raise unprecedented concerns. This project aims to offer insights into DNN security issues caused by hardware-based fault attacks and to explore ways to improve the robustness and security of future deep learning systems against such internal adversaries.

This project targets one critical research topic: securing deep learning systems against hardware-based model tampering. Recent advances in hardware fault attacks (e.g., rowhammer) make it possible to deterministically inject faults into DNN models, causing bit flips in key DNN parameters, including model weights. Such threats are extremely dangerous because they could allow an adversary to maliciously manipulate prediction outcomes at the inference stage. The project seeks to systematically understand the practicality and severity of DNN model bit flip attacks in real systems and to investigate software- and architecture-level protection techniques that secure DNNs against internal tampering. The study focuses on quantized DNNs, which exhibit higher robustness against model tampering than full-precision models. This project will incorporate the following research efforts: (1) investigate the vulnerability of quantized DNNs to deterministic bit flipping of model weights under various attack objectives; (2) explore algorithmic approaches to enhance the intrinsic robustness of quantized DNN models; (3) design effective and efficient system- and architecture-level defense mechanisms to comprehensively defeat DNN model bit flip attacks. This project will result in the dissemination of shared data, attack artifacts, algorithms, and tools to the broader hardware security and AI security community.
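To make the notion of a weight bit flip concrete, the following minimal sketch shows how flipping a single bit changes one quantized weight. It is an illustration only, not the project's attack or tooling: it assumes weights are stored as signed 8-bit integers in two's complement with a single per-tensor scale, and the names `flip_bit`, `dequantize`, and `SCALE` are hypothetical.

```python
def flip_bit(weight: int, bit: int) -> int:
    """Flip one bit of a signed 8-bit weight (assumed two's-complement storage)."""
    if not -128 <= weight <= 127:
        raise ValueError("weight must fit in a signed 8-bit integer")
    if not 0 <= bit <= 7:
        raise ValueError("bit index must be in 0..7")
    raw = weight & 0xFF   # view the weight as its raw byte pattern
    raw ^= 1 << bit       # the injected fault: exactly one bit is inverted
    # Reinterpret the byte as a signed 8-bit integer.
    return raw - 256 if raw >= 128 else raw


def dequantize(weight: int, scale: float) -> float:
    """Map a quantized weight back to a real value (assumed symmetric scheme)."""
    return weight * scale


SCALE = 0.02    # hypothetical per-tensor scale factor
original = 23   # hypothetical quantized weight, bit pattern 0b00010111

lsb_fault = flip_bit(original, 0)  # flip the least significant bit
msb_fault = flip_bit(original, 7)  # flip the most significant (sign) bit

for label, w in [("original", original), ("LSB flip", lsb_fault), ("MSB flip", msb_fault)]:
    print(f"{label}: quantized={w}, real={dequantize(w, SCALE):.2f}")
```

Under these assumptions, flipping the least significant bit moves the weight from 23 to 22, while flipping the most significant bit moves it to -105. The perturbation from a single flip is bounded by the 8-bit range, which is one intuition for why the choice of which bits to flip matters to an attacker targeting a quantized model.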